In recent years, rapid advances in artificial intelligence (AI) have transformed photography. Camera apps with built-in AI now offer features such as automatic scene detection, real-time filters, and computational image enhancement. However, as the technology becomes more sophisticated, it also raises concerns about privacy, misinformation, and the misuse of AI-generated content. This article examines emerging AI photography laws and the growing case for deepfake detection mandates in camera apps.

The rise of AI in photography

AI has brought significant changes to the photography industry. Features ranging from automatic scene detection to real-time filters and effects have made it easier for users to capture and edit photos. AI algorithms can also improve image quality, reduce noise, and suggest the best camera settings for a given scene.

Despite these benefits, the growing reliance on AI in photography has sparked debate about the ethics of AI-generated content. Because AI tools can manipulate images and videos so convincingly, there is increasing concern about deepfakes: synthetic media that can be difficult or impossible to distinguish from real footage.

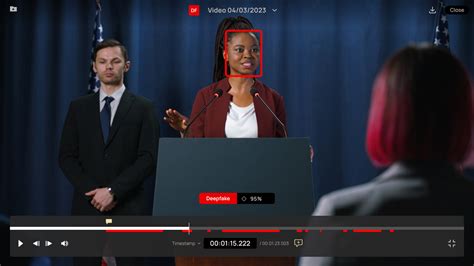

Deepfake detection: A necessity in camera apps

To address these concerns, some governments and industry leaders are pushing for stricter regulation of AI photography. One key area of focus is requiring camera apps to include deepfake detection.

Deepfake detection technology is designed to identify and flag manipulated content, helping users judge whether the images and videos they capture, view, and share are authentic. Deepfake detection matters in camera apps for several reasons:

1. Protecting privacy: Deepfakes can use a real person's face or voice to create fake personas or to put words and actions in their mouth. By flagging such content, deepfake detection in camera apps can help protect users' privacy and limit the misuse of their likeness and personal data.

2. Preventing misinformation: At a time when fake news is a significant concern, deepfake detection can help curb the spread of misinformation by letting users check the authenticity of the content they consume.

3. Upholding ethical standards: A deepfake detection mandate would set a common baseline that all camera app developers must meet, promoting the responsible use of AI technology across the industry.

AI photography laws: The way forward

Several jurisdictions have begun introducing rules that govern AI-generated imagery, aiming to regulate the use of AI in photography and protect users' rights. Key provisions these laws may include are:

1. Transparency: Laws may require camera app developers to tell users when AI is used and when deepfake detection is active, so that users are aware of the potential for manipulation.

2. User consent: Users may be asked to opt in to deepfake detection features, giving them control over how their data is used and making sure they understand the potential risks.

3. Accountability: Developers and users may be held accountable for the misuse of AI-generated content, including the creation and distribution of deepfakes.

In conclusion, as AI continues to transform photography, the ethical implications of AI-generated content must be addressed. Deepfake detection mandates in camera apps can help protect users' privacy, curb misinformation, and uphold ethical standards. By enacting AI photography laws, we can balance the benefits of AI technology with the need for responsible use and regulation.