Introduction:

Integrated graphics processing units (GPUs) share system memory with the CPU instead of having dedicated video memory, so the memory hierarchy has a large influence on their performance. Some designs, such as Intel's Iris Pro graphics with on-package embedded DRAM (eDRAM), add an L4 cache between the on-die caches and main memory. This article walks through the cache hierarchy and explores the impact of the L4 cache on integrated GPU performance.

1. Understanding the DRAM Cache Hierarchy:

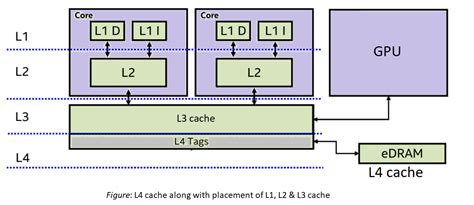

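The cache hierarchy is a multi-level memory system that keeps the data an integrated GPU is using as close to it as possible. It can consist of up to four levels: the L1, L2, L3, and L4 caches. Each level further from the GPU cores is larger but slower than the one before it. The lower levels are typically built from SRAM, while the L4, where present, is typically built from DRAM, which is why the arrangement is often called a DRAM cache hierarchy.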

1.1 L1 Cache:

The L1 cache is the smallest and fastest cache, located on the same die as the GPU cores and closest to the execution units. It stores the most frequently accessed data and instructions, so most requests are served without going to the larger, slower levels or to main memory. The L1 cache is commonly split into two parts: L1D (data cache) and L1I (instruction cache).

1.2 L2 Cache:

The L2 cache is larger and slower than the L1 cache and is also located on the die. It serves as a buffer between the L1 cache and the L3 cache, holding data that is reused often, but not often enough to stay in the smaller L1 cache.

1.3 L3 Cache:

The L3 cache is a shared cache that is larger than the L2 cache. In integrated designs it typically sits on the processor die and is reached over the on-chip interconnect, and it may also be shared with the CPU cores. It serves as a common cache for all GPU cores, so data produced by one core can be read by another without a trip to main memory, which reduces memory contention.

1.4 L4 Cache:

The L4 cache, where present, is the last-level cache and the largest cache in the hierarchy. It is typically built from embedded DRAM (eDRAM) and located off the main die but inside the processor package, not on the system's main memory modules. It serves as a high-capacity buffer between the on-die caches and main memory, holding data that no longer fits in the smaller caches but is still reused often enough to be worth keeping close to the GPU.
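
The lookup order described in sections 1.1 to 1.4 can be illustrated with a toy simulation. The sketch below is a minimal model, not a description of any real GPU: the `CacheLevel` class, the `build_hierarchy` and `count_memory_accesses` helpers, the fully associative LRU policy, and all capacities are assumptions chosen for illustration.

```python
from collections import OrderedDict


class CacheLevel:
    """Toy fully associative cache with LRU replacement (an assumed policy)."""

    def __init__(self, name, capacity_lines):
        self.name = name
        self.capacity_lines = capacity_lines
        self.lines = OrderedDict()  # line address -> None, oldest first

    def access(self, line_addr):
        """Return True on a hit; on a miss, install the line and return False."""
        if line_addr in self.lines:
            self.lines.move_to_end(line_addr)  # mark as most recently used
            return True
        self.lines[line_addr] = None
        if len(self.lines) > self.capacity_lines:
            self.lines.popitem(last=False)  # evict the least recently used line
        return False


def build_hierarchy(with_l4):
    """Hypothetical capacities in cache lines; real sizes differ by product."""
    levels = [CacheLevel("L1", 4), CacheLevel("L2", 16), CacheLevel("L3", 32)]
    if with_l4:
        levels.append(CacheLevel("L4", 128))
    return levels


def count_memory_accesses(trace, levels):
    """Probe each level in order; count requests that miss in every level."""
    memory_accesses = 0
    for line_addr in trace:
        # any() stops at the first level that hits, like a real lookup chain.
        if not any(level.access(line_addr) for level in levels):
            memory_accesses += 1
    return memory_accesses


# A 64-line working set swept four times: too big for L3, small enough for L4.
trace = list(range(64)) * 4
without_l4 = count_memory_accesses(trace, build_hierarchy(with_l4=False))
with_l4 = count_memory_accesses(trace, build_hierarchy(with_l4=True))
print(without_l4, with_l4)
```

In this model the run without an L4 sends all 256 requests to main memory, because a repeated sweep over 64 lines defeats LRU in every cache smaller than the working set. The run with an L4 sends only the first 64, since the whole working set fits in the L4 after the first sweep.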

2. Impact of the L4 Cache on Integrated GPU Performance:

The L4 cache plays a crucial role in the performance of integrated GPUs, particularly when the data being processed is too large for the on-die caches. Here are some key impacts of the L4 cache on integrated GPU performance:

2.1 Reducing Memory Latency:

The L4 cache helps reduce memory latency by keeping reused data closer to the GPU than main memory. A request that misses in the L1, L2, and L3 caches but hits in the L4 avoids the longer trip to main memory. The benefit is largest for tasks whose working set is too big for the on-die caches but still fits, at least in part, in the L4.
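
The latency effect can be estimated with the standard average memory access time (AMAT) formula, applied level by level. The sketch below assumes that levels are probed one after another and that each miss rate is local to its level. The function name `average_access_time` and every latency and miss rate are hypothetical values chosen for illustration, not measurements of any product.

```python
def average_access_time(levels, memory_latency_ns):
    """Average memory access time for a chain of caches.

    levels: list of (hit_latency_ns, miss_rate) pairs, nearest cache first.
    Each miss_rate is local: the fraction of requests reaching that level
    which miss in it.
    """
    total = memory_latency_ns
    # Fold from the level nearest main memory back toward the L1.
    for hit_latency_ns, miss_rate in reversed(levels):
        total = hit_latency_ns + miss_rate * total
    return total


# Hypothetical latencies (ns) and local miss rates, for illustration only.
on_die = [(1.0, 0.2), (4.0, 0.5), (15.0, 0.5)]  # L1, L2, L3
memory_latency_ns = 100.0

amat_without_l4 = average_access_time(on_die, memory_latency_ns)
amat_with_l4 = average_access_time(on_die + [(40.0, 0.4)], memory_latency_ns)
amat_poor_l4 = average_access_time(on_die + [(40.0, 0.9)], memory_latency_ns)
print(amat_without_l4, amat_with_l4, amat_poor_l4)
```

With these made-up numbers the L4 lowers the average from about 8.3 ns to about 7.3 ns. With a 90% L4 miss rate the average instead rises to about 9.8 ns, because in this serial model every L4 miss pays for the L4 lookup as well as the main-memory access. An L4 only helps latency when enough requests actually hit in it.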

2.2 Enhancing Data Throughput:

The L4 cache improves data throughput by serving part of the GPU's memory traffic itself, which leaves more main-memory bandwidth for the requests that miss. Because an integrated GPU shares main-memory bandwidth with the CPU, this allows the GPU to process more data in a shorter amount of time, leading to better overall performance.
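
A rough way to reason about the throughput effect is to treat the L4 and main memory as two sources with different bandwidths and weight them by the L4 hit fraction. The sketch below assumes transfers are served one after another, which makes the result a weighted harmonic mean. The function name `effective_bandwidth` and the bandwidth figures are hypothetical.

```python
def effective_bandwidth(l4_hit_fraction, l4_gb_per_s, dram_gb_per_s):
    """Sustained bandwidth (GB/s) when traffic is split between L4 and DRAM.

    Assumes transfers are served one after another, so the time per gigabyte
    is the hit-weighted sum of the time per gigabyte of each source.
    """
    if not 0.0 <= l4_hit_fraction <= 1.0:
        raise ValueError("l4_hit_fraction must be between 0 and 1")
    seconds_per_gb = (l4_hit_fraction / l4_gb_per_s
                      + (1.0 - l4_hit_fraction) / dram_gb_per_s)
    return 1.0 / seconds_per_gb


# Hypothetical figures: a 100 GB/s L4 in front of 25 GB/s main memory.
for hit_fraction in (0.0, 0.5, 0.9):
    print(hit_fraction, effective_bandwidth(hit_fraction, 100.0, 25.0))
```

Under these assumptions a 50% hit fraction raises the sustained rate from 25 GB/s to about 40 GB/s. The gain is well short of the L4's own bandwidth because the traffic that still goes to main memory dominates the total time.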

2.3 Mitigating Memory Contention:

When multiple GPU cores compete for access to main memory, the L4 cache helps mitigate contention by acting as a shared cache in front of it. Requests that hit in the L4 never reach the memory controller, so fewer requests queue there. This reduces the delay each core sees when several cores miss in the smaller caches at the same time.

2.4 Power Efficiency:

The L4 cache contributes to power efficiency by reducing the number of accesses to main memory. An access that leaves the processor package generally costs more energy than one served inside it, so every L4 hit that replaces a main-memory access saves energy as well as time, making the integrated GPU more energy-efficient.
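
The energy argument can be made concrete with a simple per-access model. The sketch below assumes every request that reaches the L4 pays for an L4 lookup and only misses also pay for a main-memory access. The function names and the energy values, given in arbitrary units, are hypothetical and not taken from any datasheet.

```python
def energy_per_access(l4_hit_rate, l4_energy, dram_energy):
    """Average energy per request that reaches the L4, in arbitrary units.

    Every request pays for the L4 lookup; only misses also pay for DRAM.
    """
    return l4_energy + (1.0 - l4_hit_rate) * dram_energy


def break_even_hit_rate(l4_energy, dram_energy):
    """L4 hit rate above which the L4 saves energy in this model."""
    return l4_energy / dram_energy


# Hypothetical costs: an L4 access costs 2 units, a DRAM access costs 10.
print(energy_per_access(0.6, 2.0, 10.0))  # compare with 10.0 without an L4
print(break_even_hit_rate(2.0, 10.0))
```

With these illustrative values a 60% hit rate brings the average cost per request from 10 units down to about 6, and the L4 saves energy whenever its hit rate is above 20%. Below that break-even point the extra lookups cost more than the main-memory accesses they avoid.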

Conclusion:

The L4 cache is an important component of the cache hierarchy in integrated GPUs that include one. By reducing memory latency, enhancing data throughput, mitigating memory contention, and improving power efficiency, it can significantly improve integrated GPU performance, especially for workloads whose working set exceeds the on-die caches. As the demand for high-performance integrated GPUs continues to grow, large last-level caches are likely to remain an important tool for optimizing GPU performance.