Introduction:

Artificial Intelligence (AI) has transformed many sectors and driven rapid technological progress. However, the ethical implications of AI have come under scrutiny, especially regarding bias. Most bias mitigation work focuses on data and algorithms, but the hardware that runs AI models also affects their behavior, so hardware-level bias mitigation strategies can play an important role in promoting fairness and preventing discrimination in AI systems. This article explores the ethical considerations surrounding AI and the implementation of hardware-level strategies to mitigate bias.

1. Understanding Bias in AI:

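Bias in AI refers to the systematic unfair treatment of, or preference for, particular groups of individuals or kinds of data. It can arise from several sources, including unrepresentative training data, algorithmic design choices, and societal prejudices reflected in historical records. Such bias can lead to discriminatory outcomes, for example a model that approves loans at different rates for equally qualified applicants from different groups, and these outcomes have serious ethical implications.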
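To make "unfair treatment" measurable, one common metric is the demographic parity difference: the gap between groups in the rate of positive predictions. The sketch below computes it in plain Python. The function names and toy data are hypothetical, and demographic parity is only one of several fairness criteria in use.

```python
from typing import Sequence


def positive_rate(predictions: Sequence[int], groups: Sequence[str], group: str) -> float:
    """Fraction of members of `group` that received a positive (1) prediction."""
    selected = [p for p, g in zip(predictions, groups) if g == group]
    if not selected:
        raise ValueError(f"no samples for group {group!r}")
    return sum(selected) / len(selected)


def demographic_parity_difference(predictions: Sequence[int], groups: Sequence[str]) -> float:
    """Largest gap in positive-prediction rate between any two groups (0.0 = parity)."""
    rates = [positive_rate(predictions, groups, g) for g in sorted(set(groups))]
    return max(rates) - min(rates)


# Hypothetical toy data: 1 = loan approved, 0 = loan rejected.
preds = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
print(demographic_parity_difference(preds, groups))  # 0.5
```

Group A is approved at a rate of 0.75 and group B at 0.25, so the difference is 0.5.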

2. The Role of Hardware in AI:

Hardware plays a central role in AI by enabling the efficient processing of data and the execution of AI algorithms. However, hardware components can also contribute to bias in AI systems. For instance, certain hardware configurations may favor specific data types or processing patterns: a low-precision number format whose range is calibrated on typical inputs can represent rarer inputs less accurately, so errors may concentrate on under-represented groups.
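Low-precision arithmetic is one place where a hardware configuration can treat data unevenly. The sketch below uses a simplified symmetric int8 quantization scheme, not the quantizer of any particular accelerator. The scale is calibrated on one hypothetical group's values only, so another group's larger values fall outside the representable range and are clipped.

```python
def quantize_int8(x: float, scale: float) -> int:
    """Symmetric int8 quantization: round x/scale and clip to [-127, 127]."""
    q = round(x / scale)
    return max(-127, min(127, q))


def dequantize(q: int, scale: float) -> float:
    """Map an int8 code back to a real value."""
    return q * scale


def mean_abs_error(values: list[float], scale: float) -> float:
    """Average reconstruction error after a quantize/dequantize round trip."""
    errs = [abs(v - dequantize(quantize_int8(v, scale), scale)) for v in values]
    return sum(errs) / len(errs)


# Hypothetical feature values: the majority group's values are small, while
# an under-represented group has larger values outside the calibrated range.
majority = [0.1, -0.5, 0.8, 0.3, -0.9]
minority = [2.5, 3.0, -2.8]

# Calibrate on the majority group only: its largest |x| maps to code 127.
scale = max(abs(v) for v in majority) / 127

print(mean_abs_error(majority, scale))  # small: only rounding error
print(mean_abs_error(minority, scale))  # large: values are clipped near +/-0.9
```

Calibrating the range on a representative sample of all groups, or checking per-group error after quantization, reduces this risk.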

3. Hardware-Level Bias Mitigation Strategies:

To address the ethical concerns associated with AI bias, several hardware-level strategies have been proposed. These strategies aim to identify and mitigate bias where it arises during computation, helping to ensure fairness and prevent discrimination. Some of the key hardware-level bias mitigation strategies include:

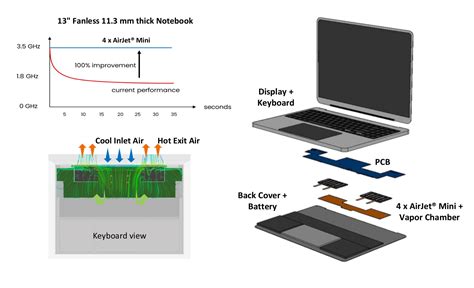

a. Hardware Acceleration:

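By leveraging specialized hardware accelerators, such as Graphics Processing Units (GPUs) or Field-Programmable Gate Arrays (FPGAs), AI algorithms can be optimized for efficient processing. These accelerators can help reduce computational bias, though mostly indirectly: their extra throughput makes it practical to run computationally expensive mitigation steps, such as retraining on reweighted data or evaluating a model separately for each demographic group, while also improving the overall performance of AI systems.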
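Accelerators also change how arithmetic is executed, which matters when results are compared across platforms. Floating-point addition is not associative, and GPUs often sum values with parallel reductions that pair terms in a different order from a sequential CPU loop. The sketch below shows the effect with three doubles; the values are chosen only to make the rounding visible.

```python
import math

values = [1e16, 1.0, -1e16]

# Left-to-right order, as a sequential loop might compute it.
# Doubles near 1e16 are spaced 2.0 apart, so 1e16 + 1.0 rounds back
# to 1e16 and the 1.0 is lost.
sequential = (values[0] + values[1]) + values[2]

# A different pairing, as a parallel reduction might compute it.
reordered = (values[0] + values[2]) + values[1]

# math.fsum tracks partial sums exactly and returns the correctly rounded total.
exact = math.fsum(values)

print(sequential, reordered, exact)  # 0.0 1.0 1.0
```

The same model can therefore produce slightly different outputs on different hardware, which is worth checking before assuming results carry over from one platform to another.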

b. Customized Hardware Design:

Designing hardware components specifically tailored to AI applications gives designers control over choices that affect numerical behavior and, in turn, bias. For example, custom memory configurations can minimize data-dependent biases, and specialized processors can adopt number formats and rounding modes that avoid systematic errors in low-precision arithmetic, reducing the risk that these errors compound into algorithmic bias.
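Rounding mode is one numeric design choice that custom hardware can expose. The sketch below is a simplified software simulation, not a model of any specific processor. It compares round-to-nearest with stochastic rounding (rounding up with probability equal to the fractional part) when many small updates are accumulated on a coarse grid. The grid spacing, increment, and step count are hypothetical values chosen to make the systematic bias of round-to-nearest visible.

```python
import math
import random
from typing import Callable


def round_nearest(x: float, step: float) -> float:
    """Round-to-nearest onto a grid with spacing `step`."""
    return round(x / step) * step


def round_stochastic(x: float, step: float, rng: random.Random) -> float:
    """Stochastic rounding: round up with probability equal to the fractional
    distance above the lower grid point, so the result is unbiased in expectation."""
    scaled = x / step
    lower = math.floor(scaled)
    frac = scaled - lower
    return (lower + (1 if rng.random() < frac else 0)) * step


def accumulate(increment: float, n: int, step: float,
               rounder: Callable[[float, float], float]) -> float:
    """Add `increment` n times, rounding the running total after every update."""
    total = 0.0
    for _ in range(n):
        total = rounder(total + increment, step)
    return total


STEP = 1.0       # hypothetical low-precision grid: only whole numbers representable
INCREMENT = 0.1  # each update is smaller than half a grid step
N = 1000         # the exact sum would be about 100.0

rng = random.Random(0)
nearest = accumulate(INCREMENT, N, STEP, round_nearest)
stochastic = accumulate(INCREMENT, N, STEP,
                        lambda x, s: round_stochastic(x, s, rng))
print(nearest)     # 0.0: every 0.1 update is rounded away
print(stochastic)  # close to 100.0, the exact sum, in expectation
```

Round-to-nearest discards every update in the same direction, while stochastic rounding lets small updates survive on average.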

c. Analog Hardware:

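Analog hardware represents values as continuous physical quantities, such as voltages or currents, rather than discrete bits. This can provide more flexibility in data representation and processing, which may help mitigate bias: by leveraging analog computing techniques, AI systems may better handle diverse data types and patterns, reducing the likelihood of discrimination. However, analog circuits are subject to device noise and variability, so any fairness benefit should be verified by measuring outcomes for each group.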
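Because analog circuits are noisy, it can help to estimate how that noise affects different groups before deployment. The sketch below simulates an analog dot product by perturbing each stored weight with multiplicative Gaussian noise. This noise model is a simplifying assumption rather than a model of any specific chip, and the weights, inputs, and noise level are hypothetical.

```python
import random
import statistics


def analog_dot(weights: list[float], inputs: list[float],
               noise_std: float, rng: random.Random) -> float:
    """Simulated analog dot product: each weight is scaled by (1 + N(0, noise_std))."""
    return sum(w * (1 + rng.gauss(0, noise_std)) * x for w, x in zip(weights, inputs))


def output_spread(weights: list[float], samples: list[list[float]],
                  noise_std: float, trials: int, rng: random.Random) -> float:
    """Mean, over samples, of the output's standard deviation across repeated runs."""
    spreads = []
    for x in samples:
        outs = [analog_dot(weights, x, noise_std, rng) for _ in range(trials)]
        spreads.append(statistics.stdev(outs))
    return statistics.mean(spreads)


rng = random.Random(42)
weights = [0.5, -1.2, 0.8, 2.0]                          # hypothetical trained weights
group_a = [[1.0, 0.2, 0.5, 0.1], [0.9, 0.1, 0.4, 0.2]]  # hypothetical inputs
group_b = [[0.1, 0.3, 0.2, 1.5], [0.2, 0.1, 0.3, 1.8]]  # rely on the large weight

spread_a = output_spread(weights, group_a, noise_std=0.05, trials=200, rng=rng)
spread_b = output_spread(weights, group_b, noise_std=0.05, trials=200, rng=rng)
print(f"group A spread: {spread_a:.4f}, group B spread: {spread_b:.4f}")
```

In this toy setup, group B's inputs lean on the largest weight, so device noise moves its outputs several times more than group A's. Noise that is uniform at the device level need not be uniform in its effect on people.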

d. Energy-Efficient Hardware:

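Energy-efficient hardware can help reduce computational biases that arise from power consumption variations. For example, if a system falls back to a smaller or lower-precision model when power or thermal limits are reached, users served under those conditions receive lower-quality predictions. By keeping energy consumption within budget, hardware-level bias mitigation strategies can support more consistent and fair AI processing.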

4. Ethical Considerations:

While hardware-level bias mitigation strategies offer promising solutions, they also raise ethical considerations. These include:

a. Transparency: Ensuring that the hardware-level strategies used for bias mitigation are transparent and understandable to both developers and end-users is crucial. This allows for better accountability and trust in AI systems.

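b. Fairness: It is essential to ensure that the hardware-level strategies used for bias mitigation are themselves fair and do not inadvertently introduce new biases or discrimination, for example by comparing per-group outcomes on the target hardware against a reference implementation.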
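One way to check that a hardware deployment has not introduced new bias is to compare per-group accuracy between a reference run (for example, full precision on a CPU) and the target hardware. The sketch below is a minimal, hypothetical regression check: the function names, the toy predictions, and the 0.02 tolerance are illustrative assumptions, not an established standard.

```python
from collections import defaultdict


def per_group_accuracy(preds: list[int], labels: list[int], groups: list[str]) -> dict[str, float]:
    """Accuracy computed separately for each group."""
    correct: dict[str, int] = defaultdict(int)
    total: dict[str, int] = defaultdict(int)
    for p, y, g in zip(preds, labels, groups):
        total[g] += 1
        correct[g] += int(p == y)
    return {g: correct[g] / total[g] for g in total}


def accuracy_gap(acc_by_group: dict[str, float]) -> float:
    """Difference between the best- and worst-served groups."""
    return max(acc_by_group.values()) - min(acc_by_group.values())


def introduces_new_bias(ref_preds: list[int], hw_preds: list[int], labels: list[int],
                        groups: list[str], tolerance: float = 0.02) -> bool:
    """Flag the deployment if the between-group accuracy gap widens by more
    than `tolerance` (a hypothetical threshold) relative to the reference run."""
    ref_gap = accuracy_gap(per_group_accuracy(ref_preds, labels, groups))
    hw_gap = accuracy_gap(per_group_accuracy(hw_preds, labels, groups))
    return hw_gap - ref_gap > tolerance


# Hypothetical results: reference predictions vs. predictions on the target hardware.
labels = [1, 0, 1, 0, 1, 0, 1, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
ref_pred = [1, 0, 1, 0, 1, 0, 1, 0]  # correct for both groups
hw_pred = [1, 0, 1, 0, 0, 0, 1, 1]   # errors only for group B
print(introduces_new_bias(ref_pred, hw_pred, labels, groups))  # True
```

Here group B's accuracy drops from 1.0 to 0.5 on the target hardware while group A's is unchanged, so the check flags the deployment.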

c. Collaboration: Collaboration between hardware designers, AI developers, ethicists, and policymakers is essential to address the ethical challenges associated with hardware-level bias mitigation strategies.

Conclusion:

AI ethics is a critical concern in the development and deployment of AI systems. Hardware-level bias mitigation strategies can play a significant role in addressing these ethical challenges by promoting fairness and helping to prevent discrimination. By understanding the role of hardware in AI and implementing appropriate strategies, we can build a more ethical and responsible AI ecosystem. However, it is essential to consider the ethical implications of these strategies themselves and to collaborate across disciplines to work toward a fair and unbiased AI future.